It’s going to be tricky to describe my process and methods for this project, because in my performance I describe my process and methods, but those weren’t actually what I did; they were just narrative devices. So I’ll start by going over my performance and what (in theory) was displayed in each section, then move on to the tech aspects. [May update later to include video, if I’m sent it, and when I finally get around to downloading GIF software I’ll make GIFs of the animations too, I suppose.]

The Infinite Poem

To follow along on the webpage, press the number key corresponding to the [numbers] found within some sections.

Hello! My name is August Luhrs and this is The Infinite Poem. Well, this is my friend Margaret. We were born exactly two weeks apart from each other, in the same hospital in West Texas; actually, fun fact, she and her family were the first people I met on this planet besides my immediate family and the hospital staff. I practically grew up at their house, so calling her my friend seems a little off, but she wasn’t my sister by blood.

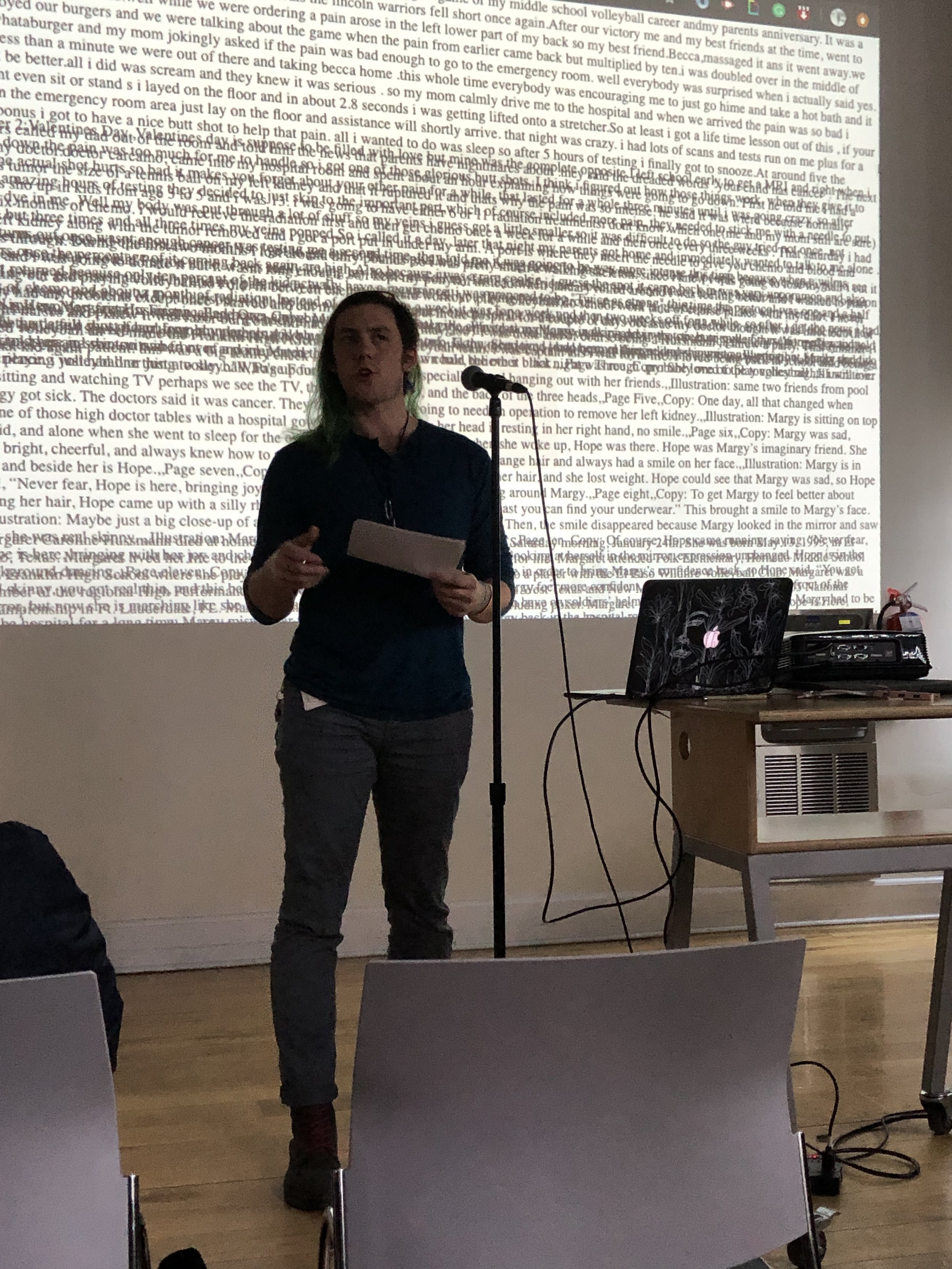

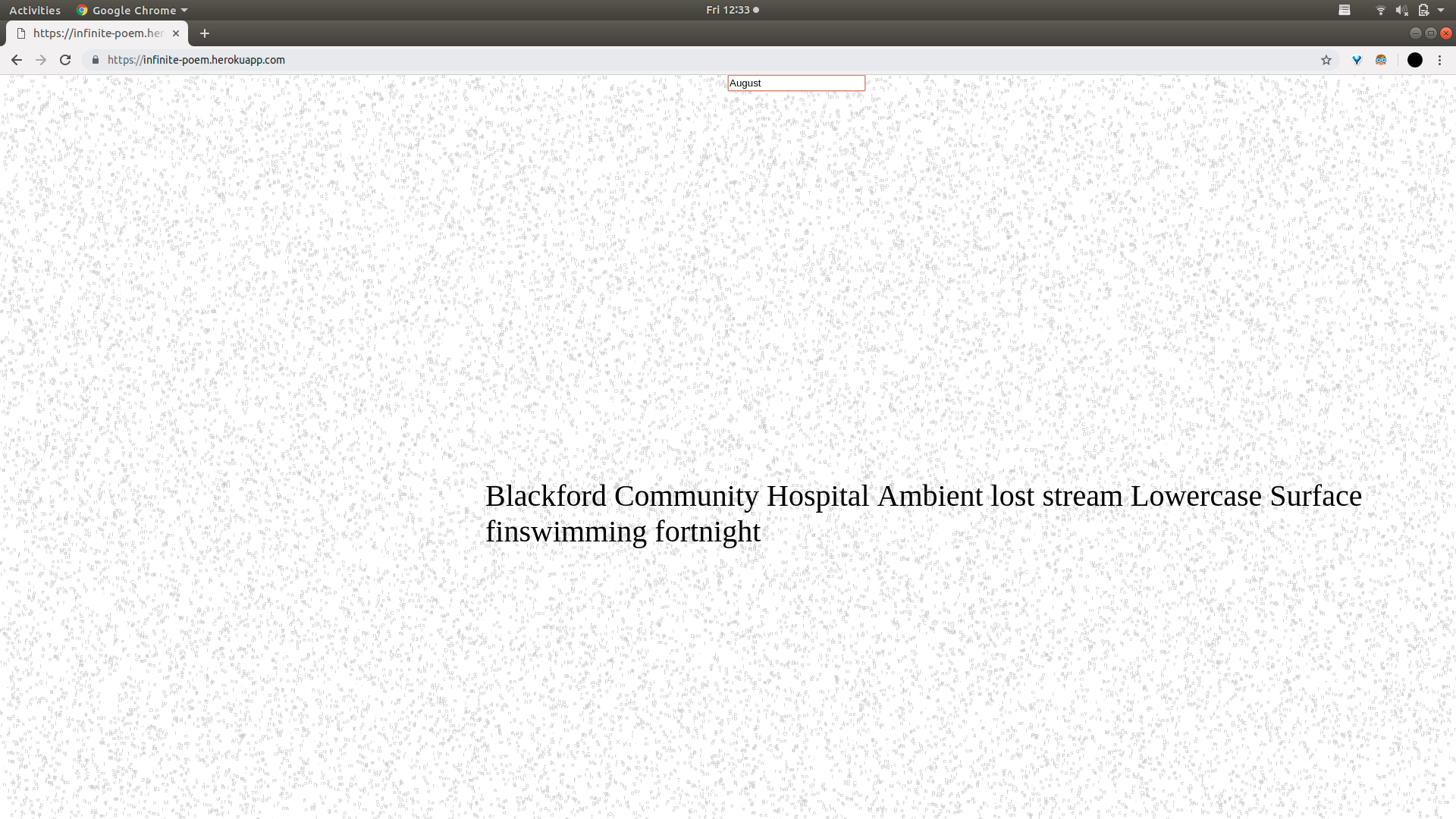

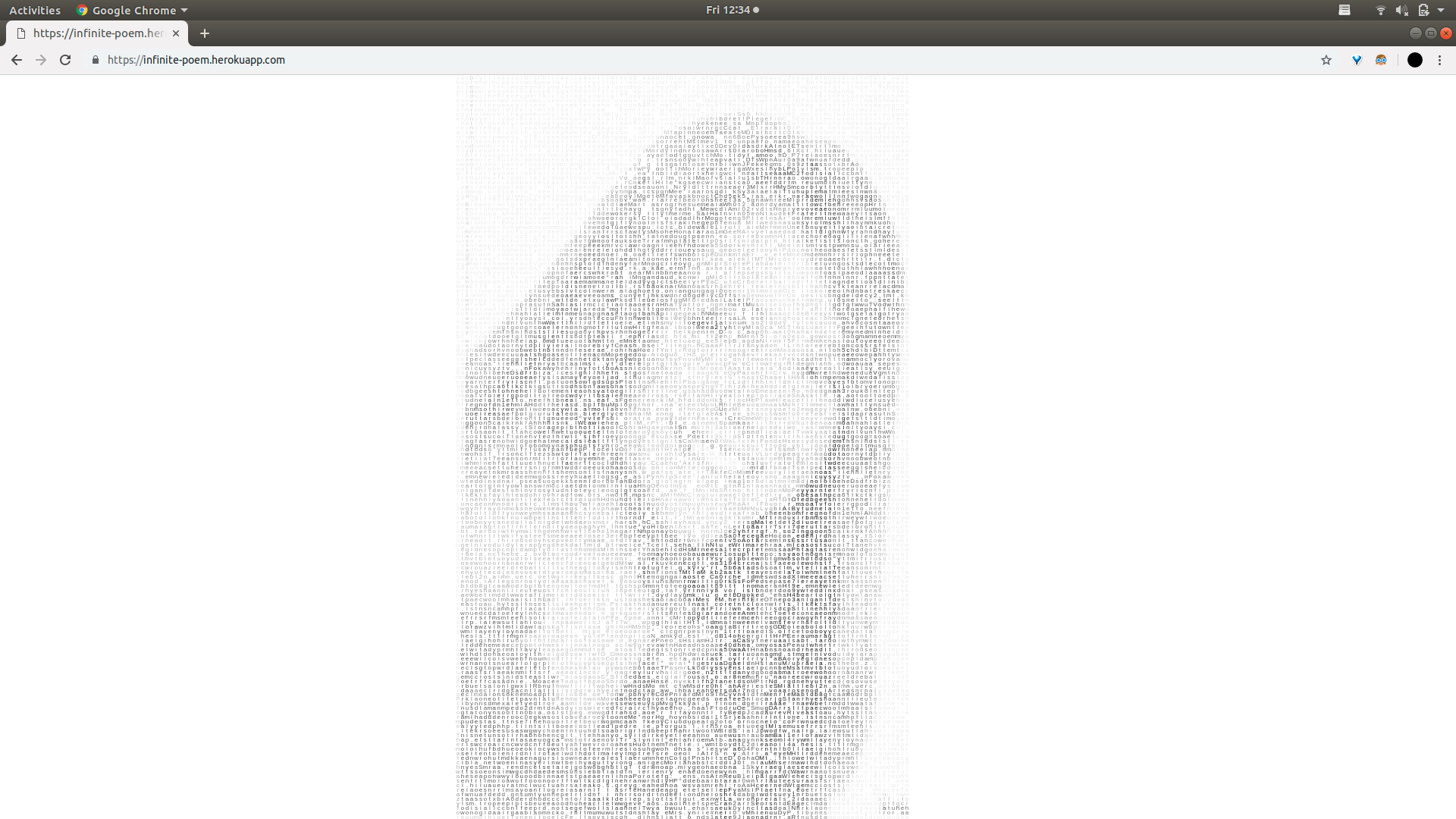

For the past couple of weeks I’ve been collecting some of her words. [1] The memoir she started writing when she was 14: chapter one opens with an epic win against her rival 8th-grade volleyball team, chapter two with the worst Valentine’s Day ever. Then [2] her outline for a children’s book about a teenage volleyball player who gets cancer. Though not technically her words, [3] I also found the itinerary for a trip with her and our families to New York City back in January 2009, when I thought there was no way in hell I would ever live here. And [4] her obituary from late January 2009, after she died following a three-year battle with cancer.

I collected all these because I had a theory: since so much of who we are is in what we say, could I take her words, or words about her, and find her? And if I could find her, could I then have a conversation with her? As y’all have probably guessed by now, most of what we do in this class is take some words, throw them through a meat grinder, and try to find interesting bits in the messy goo left over. So that’s what I tried to do: I tried to use the words of the dead as ingredients, building blocks, to create a language that the dead can use to speak with the living.

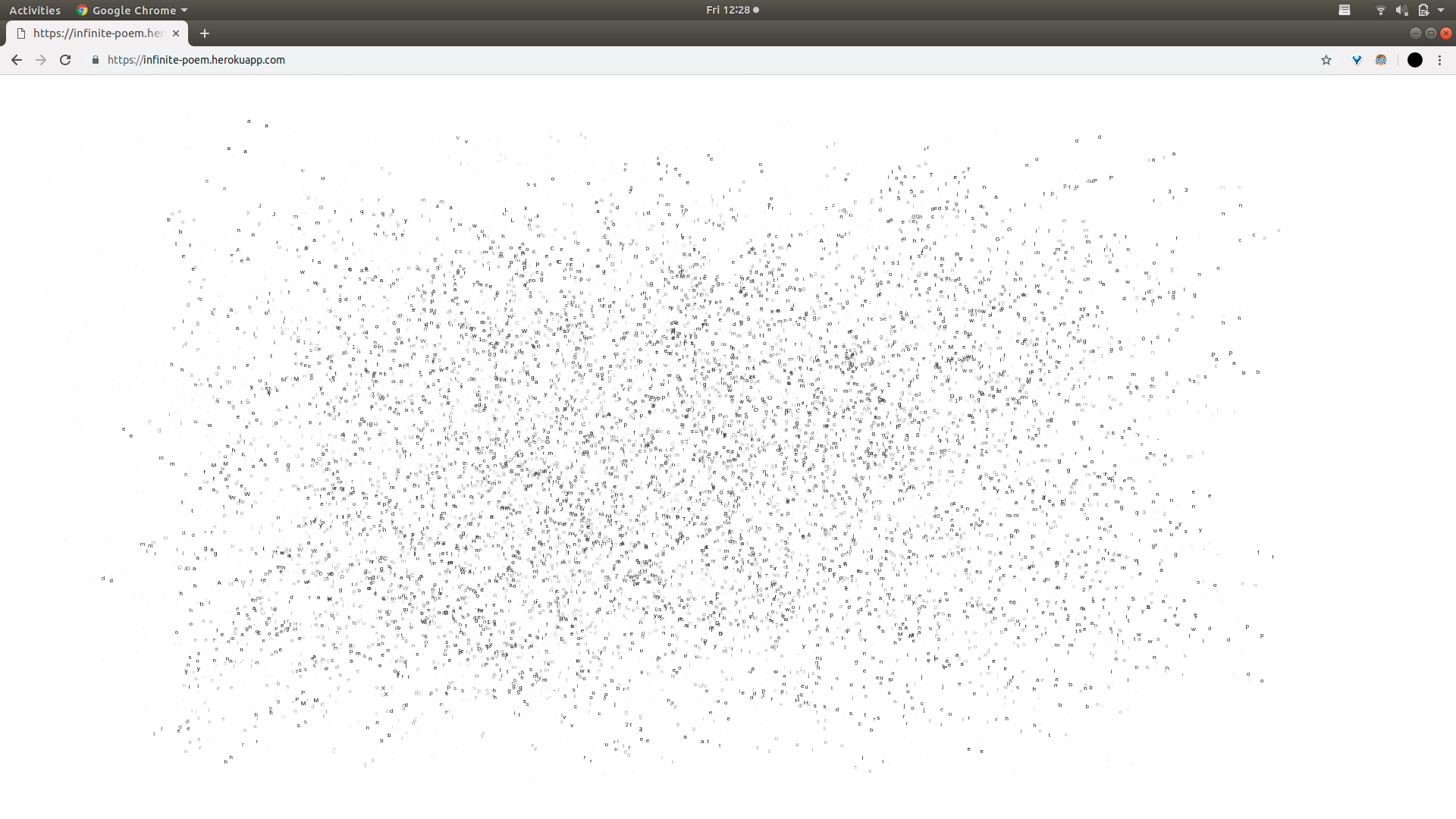

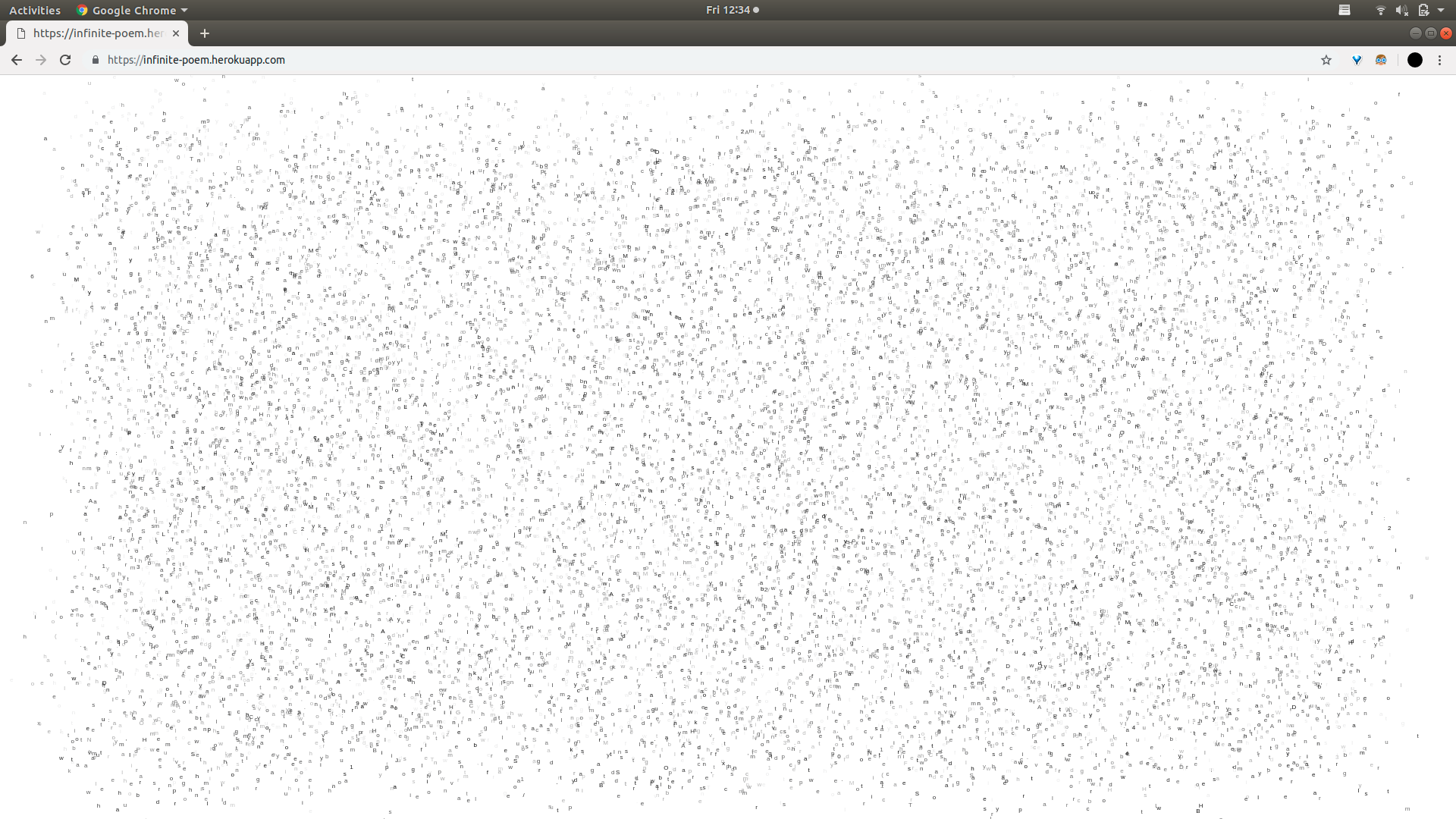

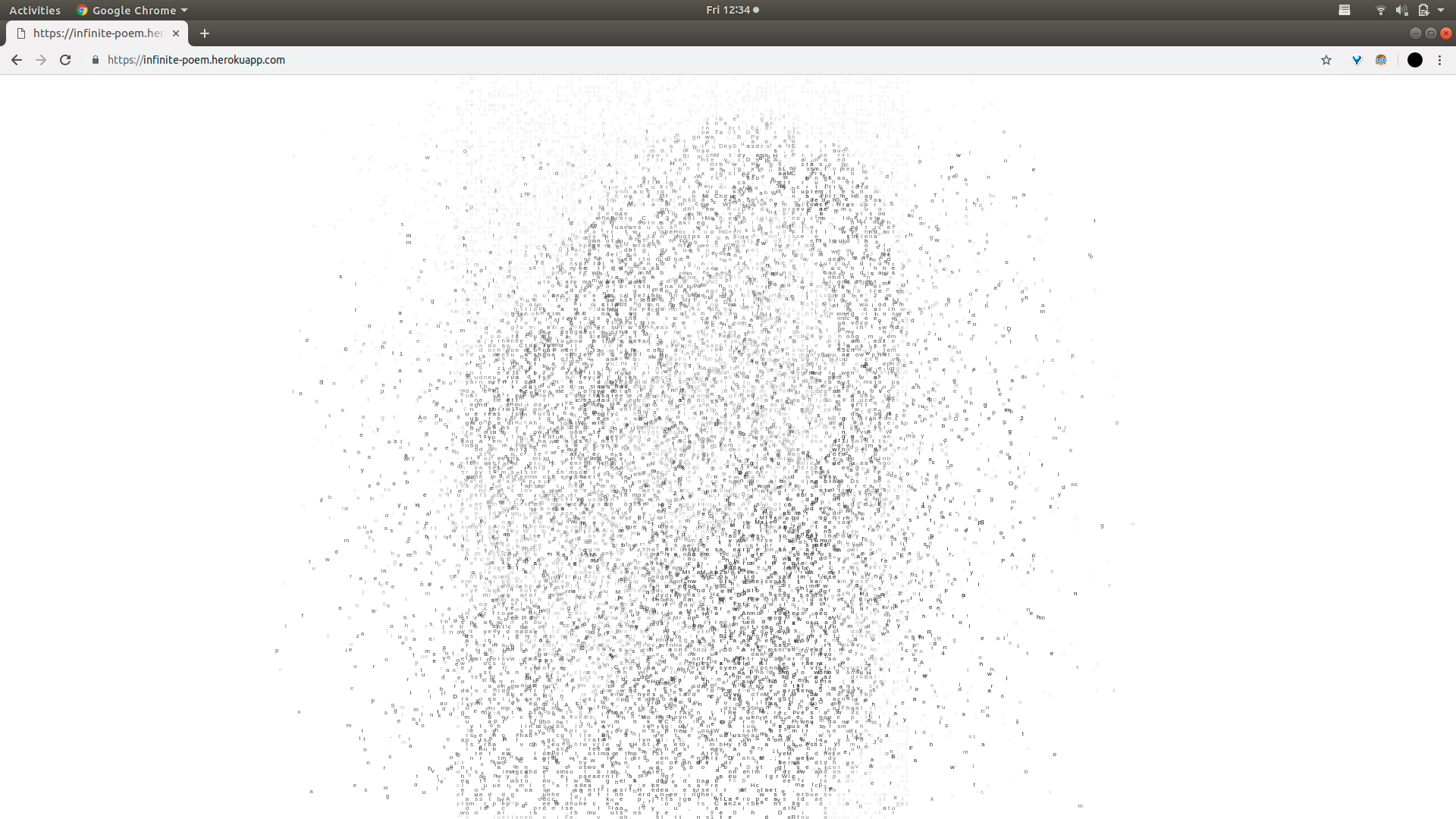

[5] So I started the meat grinder, and to no one’s surprise, all I got out was a mess. No matter how closely I looked, there was no secret message hidden amidst the chaos. I thought maybe there was something wrong with my code, but after a few days of staring at meaningless alphabet soup, I realized my problem wasn’t the computer; it was my patience. If I sped up time, I could see the letters were actually taking shape [6].

But what did it mean? These fragments showing up in the letters didn’t seem like things Margaret was definitely trying to show me from beyond the grave. Were the images the message, or were they maybe where I could find the message? Turns out they were both.

I started to notice patterns between some of the characters and the images that showed up. [7] I put some letters under the microscope and found that each letter contained within it a whole other thing, some discrete part of the world, from horses as the letter h, to prime numbers as the letter b, to occupations and adverbs and so on. But was this Margaret speaking to me? It didn’t seem like it; there were no curse words.

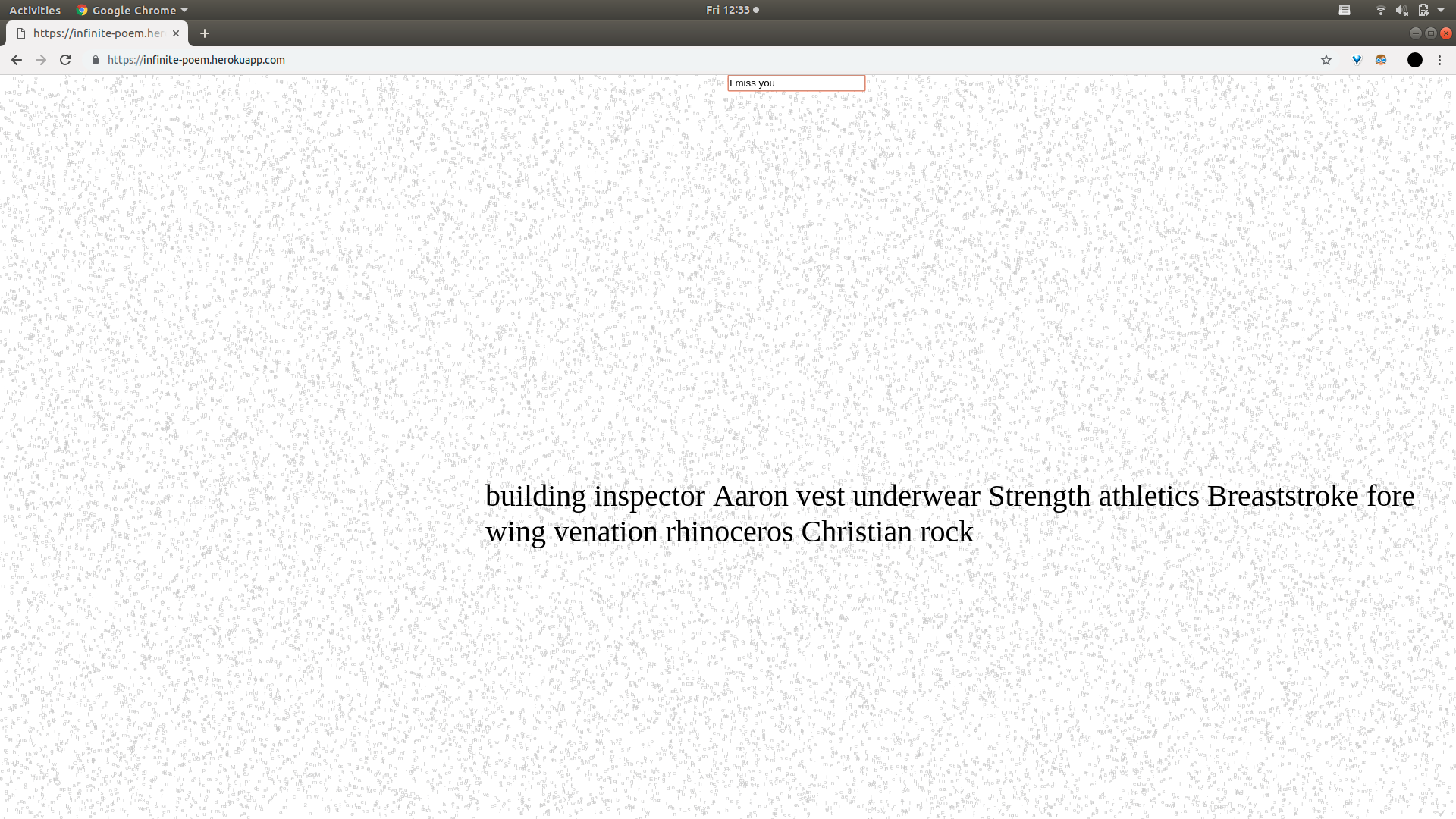

I had the idea to try to nudge it along, to throw in some words that I thought she might want to say to me and help get the soup on the right track. [8] Like the decay in compost serving as the foundation for new growth, I found that her words had created their own life, a world mirroring our world, and as I studied them and fed them more words, I grew able to understand what they were telling me. It was through these seemingly random items and phrases that I finally heard her speak to me:

“August”

“I miss you”

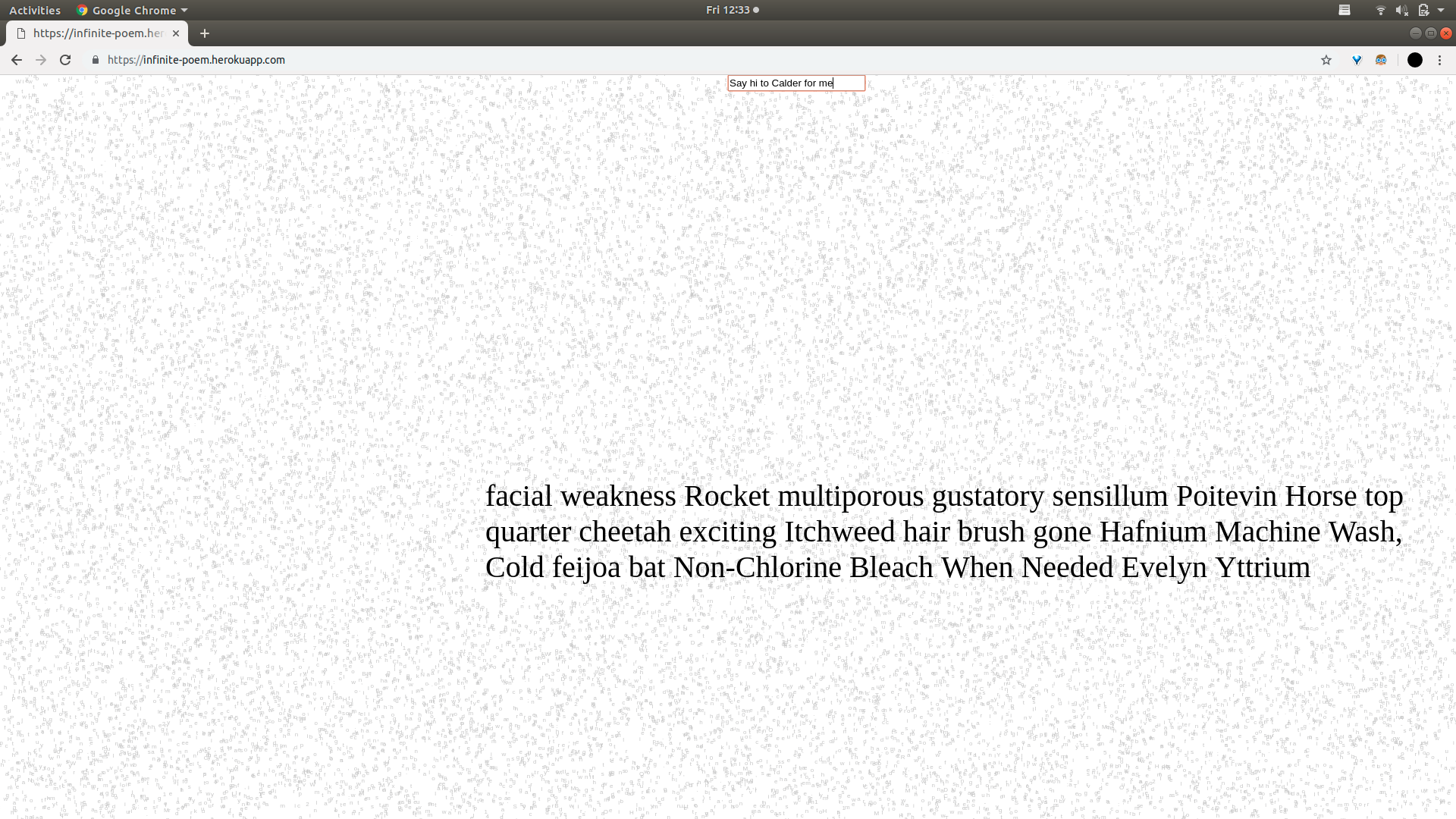

“Say hi to Calder for me”

“Gotta go, party’s at nine.”

What I realized had happened, this expression of life, was not the opposite of her death but a reflection of it. I believe that in her death she returned to the universe, the atoms that made up her body now spread all over the world and eventually the galaxy. So of course, to bring her back, to find her, I had to find the infinite. And as it turns out, that’s pretty easy.

The dead speak to us all the time, in every facet of our lives — their words to us are in every piece of fruit, every laundromat, [9] every crayon color, element, and weed strain. Because although our loved ones are now part of that great big whatever, we don’t find them there. We can’t. Instead we have to look here, at the tiny details all around us, because in each one of them, we can find a seed of the infinite. The sticker on the orange peel, the rooftop water heater, that one line from that one movie where you know, you just know, there they are, and they’re saying something to you, something, maybe something important, maybe just saying hey.

METHODS

LINK TO GITHUB REPO FOR BOTH THE POEM AND THE API

Hoo boy, I went through a lot of different things for this, so here’s a table of contents if you want to find a specific part of the process:

Concept and Theme

The Corpse Corpus

Corpora Dataset Dictionary for each ASCII character (python)

The accidental API (that anyone can use now!) (python)

Text to Image (p5)

Google Image Downloader + Resizer Script (python)

The performance sketch (p5)

Final Thoughts

Concept and Theme

When I started this project in April, it was a wildly different concept. I wanted to create an ecosystem of ASCII characters that, if allowed to “live” in a digital environment designed with certain evolutionary principles, would eventually create their own species with their own languages. Obviously it would have been very speculative, since getting it to work without forcing some steps myself would be about as impossible as actually creating life.

The night before the first workshop of final concepts, though, I couldn’t shake the feeling that this project was really something else, and I felt totally stuck. I don’t remember what the catalyst for the jump was, but I remember venting to my partner, Hayley, about what I was working on, and an hour later I was feeding Margaret’s obituary into a p5 function that would display her portrait using the letters of the obit. If I had to guess, I was trying to reach the thing that intrigued me most about the ecosystem idea, a core belief of mine: that deep down within each arbitrary part of life, you could somehow find the entire universe. So I thought, deep down within the words of the dead, could we find the dead? Or something like that.

I had originally used Margaret as a placeholder, planning to create something with the NYT Obituary API I had worked with last semester, where you could search someone’s name and a whole thing would happen with the obit text and a photo. But after the workshop, I realized that the piece would be so much more powerful if it were personal. I don’t know why I don’t always just go there; every major project I’ve done ends up being something vulnerable, since I believe it’s the duty of the artist to bleed their truth on stage.

Once I knew it was about Margaret, though, I immediately felt conflicted, a feeling that hasn’t gone away. I didn’t want to use Margaret just to play a sentimental card that would evoke a response. I don’t like feeling like she and her death are a lamb I can bring out and slaughter every time I want to exploit it. But I knew it wouldn’t feel right to abstract the subject of the project to “some dead person”, and that if I really wanted to convey my core message, it had to follow the guideline: “If you want to create a work of art that is universally relatable, you have to use something that is unique and personal to you.”

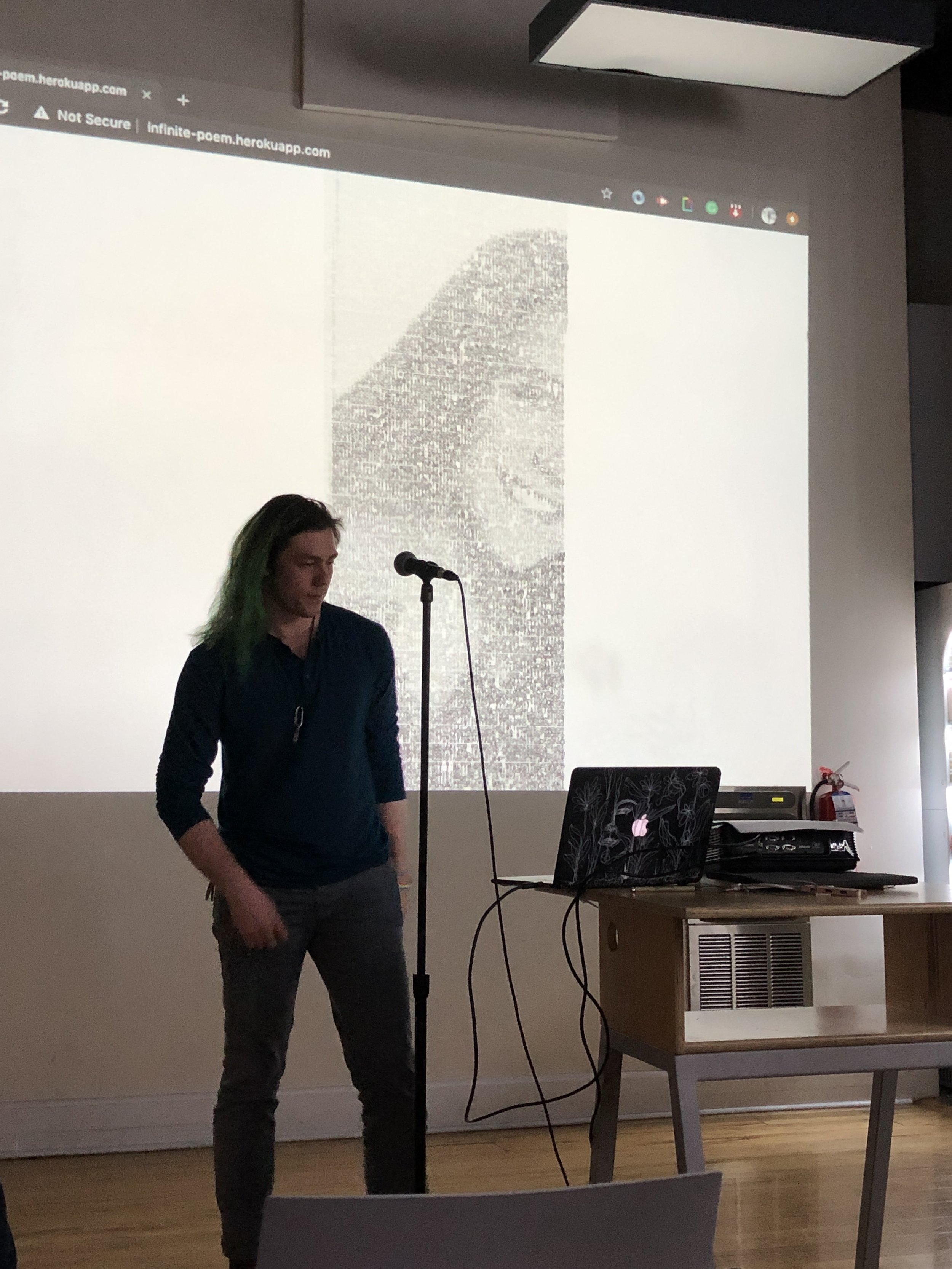

Once I had the project idea, though, I didn’t really know what to do with it. What was the actual output, the poem? I never thought the portrait was the final product, but it felt like an important reflection of what the output was supposed to be: a textual representation of the dead. Then I realized I was coming at it all wrong. I had been thinking the output was words on a page, but the final for this class was a performance, not a zine. And I knew how to do a performance. The moment I realized that, the whole piece came to me fully formed in one giant chunk, and I spent the next two weeks whittling that chunk down into its most coherent version.

Obviously, with a theme as inherently impossible to convey as “the infinite”, I knew my challenge was to design the performance so that the audience would gradually come to understand what I meant by the infinite: not the huge, messy, 25,000-character alphabet soup, but one letter at a time. So although I had figured out how I was going to make the “poem”, I decided to give the audience a description of an alternative, fake process that I hoped would lead them to the final conceptual poem. So the poem became the performance of the poem.

In the first presentation of the poem to the class, I had planned on a very different set of animations, but I had pulled an all-nighter just trying to get the Python Flask server to send JSON to my p5 sketch, so I leaned heavily on my speech rather than the tech. It was disjointed, and though I felt I had elicited an emotional response from some of the audience, I could tell that no one actually knew what I was trying to do.

I spent the rest of the next week scrambling to finish three other finals, so I didn’t have time to work on it again until the night before the performance. I knew what I wanted to change, but I didn’t know how to remold it into something that would serve the narrative better. As I was leaving school, I tried explaining the concept to my friend Idit, and she told me about a time she had encountered that phenomenon of feeling like some arbitrary thing in the world is really a sign from someone who’s passed on. That’s when I realized that I myself had fallen into the trap I was trying to help my audience avoid: I was trying to convey the infinite, when my whole point was that all I needed to convey was one thing. I needed to get more specific; I could reference the infinite, but my process and output needed to be much clearer, something for the audience to grab onto so the bigger picture would reveal itself.

I ran into a lot of snags trying to get the images to loop the way I wanted, and since I only had a few hours to work before class, I cut my losses and decided to show a buggy version rather than omit that section from the narrative, since it felt like a necessary bridge between the sections before and after it. The text-input-to-poem step was actually really easy, since I had accidentally made the API the previous week, but I didn’t know what I was actually going to type in during the performance. Then, while rehearsing my speech right before the show, I thought of the first couple of things she might say, and when I started crying I realized they were the right inputs for the show.

In a moment of premonition, I told some of my friends at the show that our program might as well be giving us a Master’s in Technical Difficulties. I had to borrow Nathier’s laptop for my piece since I couldn’t rely on my fickle Linux HDMI driver, so there were a lot of speed bumps in the piece because I had never performed with that laptop before. I wish I had had more time to iron out those kinks, since I was literally still uploading my code to Heroku when the show started, but all in all I think the narrative carried what I was trying to do anyway. And based on some very nice feedback from people who came up to me after the show, I think I really did convey what I had set out to.

The Corpse Corpus (corpsus)

I knew I wanted to use as many of Margaret’s own words as possible for the text file that would eventually make up the images and poem, but unfortunately (or fortunately) she died before she could really have a social media presence, and her MySpace has long since been deleted. All I could find online was her obituary, but luckily my mom had a bunch of files of her writing, since they had written her book together. It was very fitting that in a project where I was trying to hear her speak to me, I got to read words of hers that I had never seen before. I can’t remember the last time I heard her “voice” say something that I hadn’t already heard her say. So much of the time after her death was spent hearing others repeat her old sayings, but here I got to read her own writing, neither edited nor watered down by the degradation of memory.

Corpora Dataset Dictionary

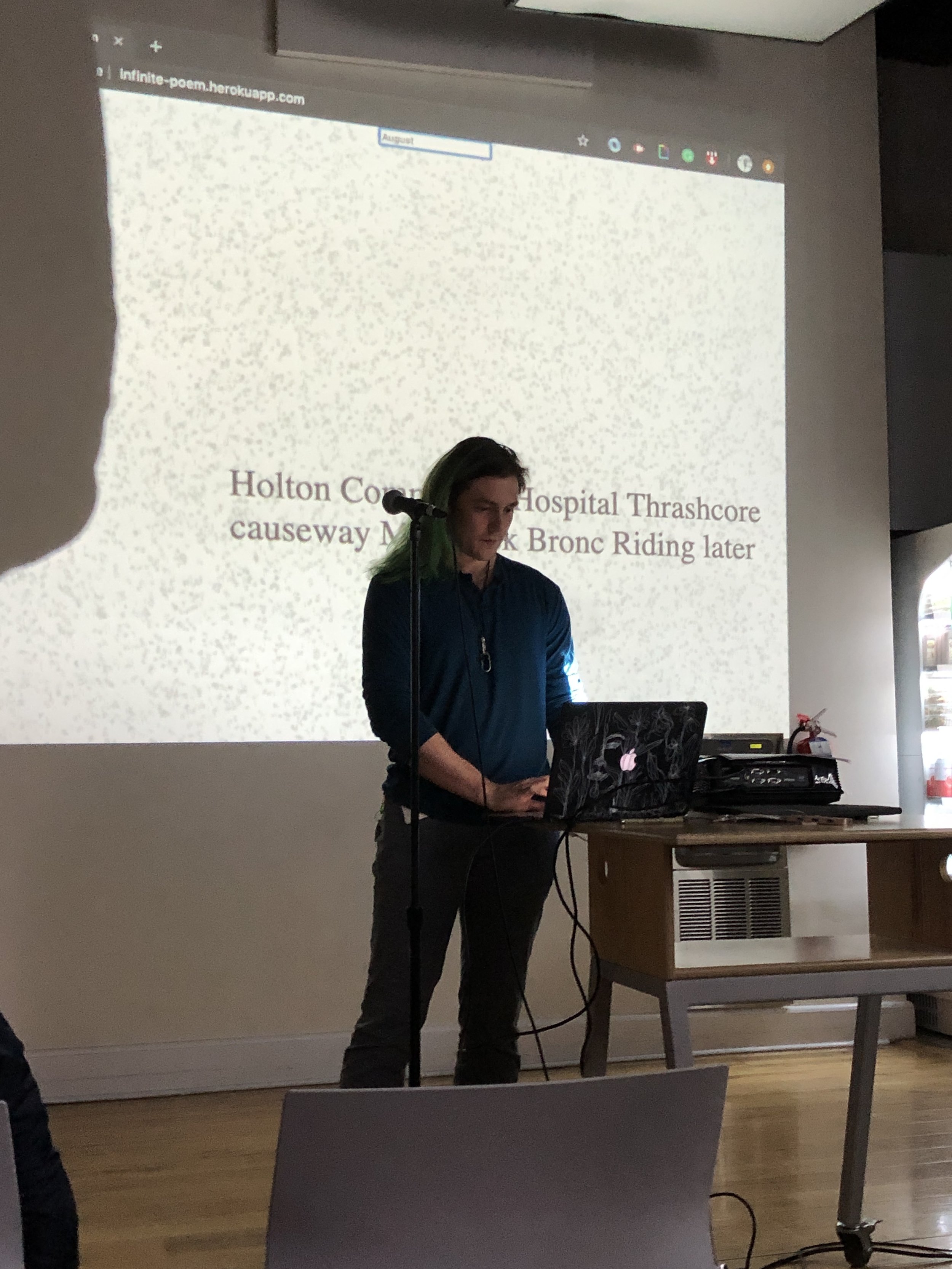

The bulk of the early technical work on this project was the very tedious (but satisfying) task of finding datasets within the epic Corpora Project that I could correlate to each ASCII character that might show up in her writing. Since the whole thing was about finding the universe inside each individual facet of life, I wanted a huge spread to represent as much of “life” as I could. The assignments to the characters were more or less arbitrary, though some letters were specially chosen to reflect various relationships to Margaret (“M” was weed strains). Once I knew which datasets I wanted to represent each of the roughly 100 characters, I assembled a giant dictionary in a Jupyter notebook, the last part of which was a function that could take a string as a parameter and output a new string made of a random item from the dataset corresponding to each character, in that character’s position. So the string “this is a test” might come out to say “century American Paint Horse slacks Metallic silhouette shooting jogging suit Cross-country rally Keek century Meitnerium Zen Bu Kan Kempo month”.
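If it helps to picture that dictionary-plus-function idea, here’s a minimal Python sketch. The dictionary is a tiny hypothetical stand-in: only the horses-for-“h” and primes-for-“b” categories come from this post, and the items are placeholders rather than the real Corpora data. Passing characters with no dataset through unchanged is also an assumption, not necessarily what the notebook did.

```python
from __future__ import annotations

import random

# Hypothetical stand-in for the ~100-entry dictionary. Only the categories for
# "h" (horses) and "b" (prime numbers) come from the post; items are placeholders.
CHAR_DATASETS: dict[str, list[str]] = {
    "h": ["American Paint Horse", "Appaloosa", "Clydesdale"],
    "b": ["2", "3", "5", "7", "11"],
    "t": ["century", "month", "decade"],
}


def poem_items(text: str, rng: random.Random | None = None) -> list[str]:
    """Swap each character for a random item from its dataset, keeping order.

    Characters with no dataset are passed through unchanged (an assumption).
    """
    rng = rng or random.Random()
    items = []
    for char in text:
        dataset = CHAR_DATASETS.get(char)
        items.append(rng.choice(dataset) if dataset else char)
    return items


def infinite_poem(text: str, rng: random.Random | None = None) -> str:
    """Join the per-character items into one string, like the notebook's output."""
    return " ".join(poem_items(text, rng))
```

Calling `infinite_poem("the bat")` gives a different string on every run; passing a seeded `random.Random` makes a run repeatable, which is handy for testing.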

The Accidental API

When trying to figure out how to actually make the poem, I decided it would get my message across best if I could run animations using the poem in p5. When I asked Allison how I could get the Python script I had made in my Jupyter notebook to talk to p5, she suggested making a Flask server that could run the Python code, so my p5 sketch could just request the poem from the server. So simple. Should take me an hour or two, I thought. But no, for some reason (I literally don’t remember, because it was all during an energy-drink-fueled, delirious all-nighter) it took me from 11pm to 8am just to get a Python server hosted on Heroku that I could call from within p5. I tried doing it locally, which would have been easier, but no, CORS had other plans, so I had to use Heroku. As a happy accidental side effect, though, I realized in the morning that I had created an API. Since my p5 sketch was requesting a JSON object from this website, where each character in the corpsus was indexed with its corresponding random dataset choice (different each time), I had created a website that people could use to make their own infinite poems (a step I luckily was able to use for the final input function). To make one yourself, just go to https://infinite-poem-api.herokuapp.com/poem.json?text=put%20your%20input%20text%20here and replace everything after “?text=” with your input text (%20 instead of spaces!).
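Here’s a rough sketch of the shape of an API like that. It swaps Python’s built-in `http.server` in for Flask so it runs with no dependencies, and it assumes the JSON is a list of `{"char", "item"}` objects, one per input character (the real payload format isn’t shown in this post). The `Access-Control-Allow-Origin` header is the standard way to let a page from another origin, like a p5 sketch, read the response, which is probably the kind of CORS wall a local setup runs into. The datasets and the `poem_payload`, `poem_url`, and `serve` names are hypothetical.

```python
from __future__ import annotations

import json
import random
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs, quote, urlparse

# Hypothetical placeholder datasets; the real API used ~100 Corpora lists.
CHAR_DATASETS = {
    "h": ["American Paint Horse", "Appaloosa", "Clydesdale"],
    "b": ["2", "3", "5", "7", "11"],
}


def poem_payload(text: str) -> list[dict[str, str]]:
    """One entry per input character, in order, with a fresh random item.

    Characters with no dataset echo themselves (an assumption).
    """
    payload = []
    for char in text:
        dataset = CHAR_DATASETS.get(char)
        item = random.choice(dataset) if dataset else char
        payload.append({"char": char, "item": item})
    return payload


def poem_url(base: str, text: str) -> str:
    """Build a request URL; quote() turns each space into %20."""
    return f"{base}/poem.json?text={quote(text)}"


class PoemHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        url = urlparse(self.path)
        if url.path != "/poem.json":
            self.send_error(404)
            return
        # parse_qs percent-decodes the query, so %20 arrives here as a space.
        text = parse_qs(url.query).get("text", [""])[0]
        body = json.dumps(poem_payload(text)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        # Lets a sketch served from a different origin read this response.
        self.send_header("Access-Control-Allow-Origin", "*")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8000) -> None:
    """Run the API locally until interrupted."""
    HTTPServer(("127.0.0.1", port), PoemHandler).serve_forever()
```

After calling `serve()`, opening `http://127.0.0.1:8000/poem.json?text=hb%20x` in a browser should return four entries, space included, with new random items on each reload.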

Text to Image

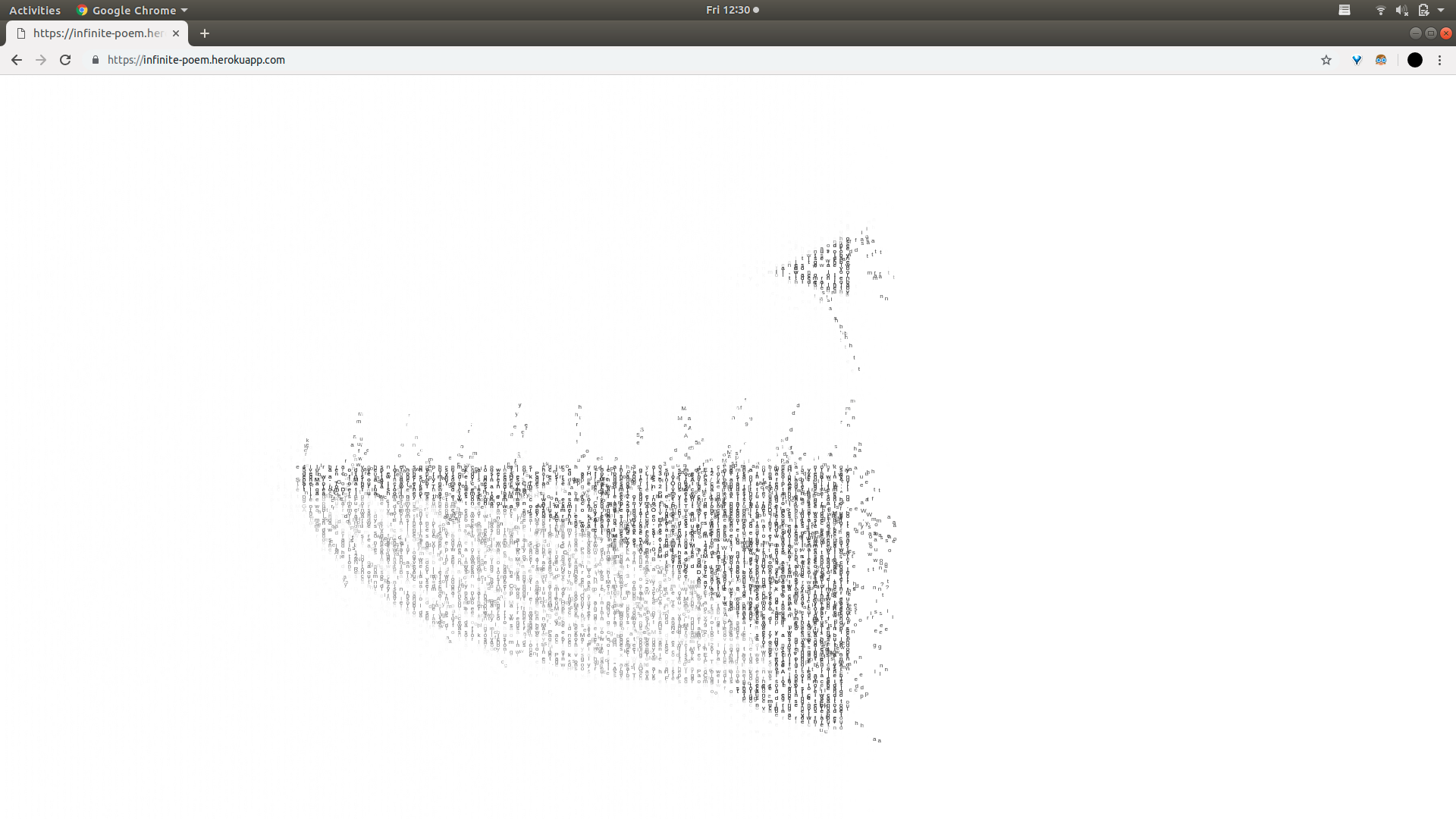

Since I needed some sort of visual for the audience to latch on to, I figured having the letters rearrange to form different images would help drive home the idea that from the building blocks of the source text, you could see any single part of the universe. I had worked extensively with representing images as arrays of shapes in a project last semester, so getting the first images up was a piece of cake. At the start of the infinite poem sketch, the code would loop through an array whose dimensions were the width and height of the input image, assigning each character in the corpsus a number of properties to create a Letter object:

Each Letter had the following information:

char: the character from the input corpsus (aka the letter)

item: the specific datum from the indexed API JSON (aka the “thing”)

grey: the greyscale value of the pixel that letter was assigned to represent in the image

a random x and y start point

a home x and y end point that represented the spot on the image it should end up at

a step count that was slightly different for each letter so that they would all be moving at different speeds to form the image more naturally

So from that initial assignment, any time I wanted to reference the information within a letter or make a new image, all I had to do was loop through the letter array again.
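Here’s a rough Python translation of that Letter setup (the real thing was a p5 sketch). Treating the step value as the fraction of the remaining distance a letter covers each frame is an assumption, as are the names `build_letters` and `update` and the 0.02 to 0.08 step range. Home positions here are image pixels, which a sketch would scale up to canvas coordinates.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass
class Letter:
    char: str      # the character from the corpsus
    item: str      # the datum from the API JSON (the "thing")
    grey: int      # greyscale value of the pixel this letter represents
    x: float       # current position, starting somewhere random
    y: float
    home_x: int    # target column in the image
    home_y: int    # target row in the image
    step: float    # fraction of the remaining distance covered per frame

    def update(self) -> None:
        """Ease toward home; different step values give different speeds."""
        self.x += (self.home_x - self.x) * self.step
        self.y += (self.home_y - self.y) * self.step


def build_letters(
    entries: list[dict[str, str]],
    pixels: list[list[int]],
    canvas_w: float,
    canvas_h: float,
    rng: random.Random | None = None,
) -> list[Letter]:
    """Walk the image row by row, handing each pixel the next corpsus entry.

    Stops early if the corpsus runs out before the image does.
    """
    rng = rng or random.Random()
    letters: list[Letter] = []
    for row, grey_row in enumerate(pixels):
        for col, grey in enumerate(grey_row):
            if len(letters) == len(entries):
                return letters
            entry = entries[len(letters)]
            letters.append(
                Letter(
                    char=entry["char"],
                    item=entry["item"],
                    grey=grey,
                    x=rng.uniform(0, canvas_w),
                    y=rng.uniform(0, canvas_h),
                    home_x=col,
                    home_y=row,
                    step=rng.uniform(0.02, 0.08),
                )
            )
    return letters
```

A draw loop would then call `update()` on every letter each frame and draw `letter.char` at its position, shaded by `letter.grey`.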

The issues with the last features I tried to work in mainly came from trying to create a one-size-fits-all function that could take any image as a parameter and reassign the letters new home spots and grey values. Because I was so scrambled for time, I got really sloppy and had lots of trouble reassigning the array when the images were different sizes. Looking at the code now, it’s obvious where I went wrong, but I was so frenzied yesterday that I decided it was easier to change the narrative to fit the bugs.
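One way to dodge the different-sizes problem is to stop assuming one letter per pixel and instead spread however many letters there are evenly across whatever image comes in. This is a hypothetical sketch, not what the original code did; the `reassign` helper and the minimal `Letter` stand-in are made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Letter:
    """Minimal hypothetical stand-in for the sketch's Letter object."""

    char: str
    grey: int = 0
    home_x: int = 0
    home_y: int = 0


def reassign(letters: list[Letter], pixels: list[list[int]]) -> None:
    """Give every letter a new home and grey value in an image of any size."""
    height, width = len(pixels), len(pixels[0])
    total = width * height
    n = len(letters)
    for i, letter in enumerate(letters):
        # Spread the n letters evenly over the image's pixel indices. Since
        # i < n, i * total // n is always < total, so it never runs off the
        # end of the image, whether it has more or fewer pixels than letters.
        p = i * total // n
        letter.home_y, letter.home_x = divmod(p, width)
        letter.grey = pixels[letter.home_y][letter.home_x]
```

When the image has more pixels than letters, the letters skip pixels evenly; when it has fewer, neighboring letters share a pixel. Either way the same letter array works for every image.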

Google Image Downloader

One aspect of the piece that I had set up but didn’t have time to implement was supposed to appear in the performance instead of the looping, pre-selected images. I had found a Python script that could scrape an image off Google Images based on a string of search text, and another that would resize any image, so my plan was to have a function that, upon clicking a letter, would take that character’s item, find an image of that item on Google, download it, resize it to fit the letter array, and then have the letters rearrange to show the image. It probably would have taken too long to be practical for the performance, but damn, it would have been cool. I got the script to work like magic; I just never combined it with the p5 side to see how well they played together.
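The resize step is the part that maps most directly onto the letter array: scale the image, keeping its aspect ratio, until its pixel count is no bigger than the number of letters. With scale s = sqrt(N / (w * h)), the scaled size (w * s) by (h * s) has exactly N pixels, and rounding down keeps it at or under N. Here’s a dependency-free sketch of that math plus a nearest-neighbor resample on a greyscale grid; the function names are hypothetical, the original relied on a found resizer script rather than this code, and the scraping half isn’t sketched.

```python
from __future__ import annotations

import math


def fit_to_letters(width: int, height: int, n_letters: int) -> tuple[int, int]:
    """Same-aspect-ratio size with at most n_letters pixels (n_letters >= 1)."""
    scale = math.sqrt(n_letters / (width * height))  # (w*s) * (h*s) == n_letters
    new_w = max(1, min(n_letters, math.floor(width * scale)))
    # Clamp the height so new_w * new_h can never exceed n_letters.
    new_h = max(1, min(n_letters // new_w, math.floor(height * scale)))
    return new_w, new_h


def resize_nearest(pixels: list[list[int]], new_w: int, new_h: int) -> list[list[int]]:
    """Nearest-neighbor resample of a greyscale grid (rows of ints)."""
    height, width = len(pixels), len(pixels[0])
    return [
        [pixels[y * height // new_h][x * width // new_w] for x in range(new_w)]
        for y in range(new_h)
    ]
```

With 25,000 letters, a 640x480 image comes out at 182x136, which is 24,752 pixels, so every pixel gets a letter and a few letters are left over.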

The Performance Sketch

For the performance, I knew I wanted to keep all my displayed material in one sketch instead of having to click between different tabs. Luckily, since it was all hosted on a separate Heroku server, I knew I could run it from any computer. I set up different states in the draw loop so that I could trigger the different stages of the performance with a key press (detailed in the section at the beginning of this post). That way, all I needed to do to transition between sections was press a key, and I could focus more on what I was saying than on navigating several different applications.
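The key-press-to-state pattern is simple enough to sketch in a few lines. In p5 it would typically be a global `state` variable that `keyPressed()` sets and `draw()` switches on; here it’s a Python stand-in where the stage names and renderers (which just return strings) are hypothetical.

```python
from __future__ import annotations

from typing import Callable


class Performance:
    """Key presses choose the stage; draw() renders whatever stage is active."""

    def __init__(self, stages: dict[str, Callable[[], str]], start: str) -> None:
        self.stages = stages
        self.state = start

    def key_pressed(self, key: str) -> None:
        # Unmapped keys are ignored, so a stray press can't derail the show.
        if key in self.stages:
            self.state = key

    def draw(self) -> str:
        # Called every frame; only the active stage's renderer runs.
        return self.stages[self.state]()


# Hypothetical stages keyed by the [numbers] in the performance text.
show = Performance(
    {
        "0": lambda: "title card",
        "1": lambda: "memoir",
        "4": lambda: "obituary",
        "5": lambda: "alphabet soup",
    },
    start="0",
)
```

Because every stage lives in one lookup table, adding a section is just adding a key, and the draw loop never has to know which section comes next.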

Final Thoughts

I wish I could see what the next, improved version of this performance would be, but I doubt I’ll do it again unless someone asks me to read it for some other event. So for this current version, I’m more or less happy with how it turned out. At the end of the day, all I really care about is that people felt something from it, which some people said they did, so I consider the whole project a success.